Behind the Screens

The Convergence of HDTV and AI

A New Cognitive Frontier

Artificial intelligence now exceeds human capabilities by several orders of magnitude in speed, volume, memory, and structured problem-solving, a transformation underscored by advances in large-scale models and high-performance computing. In terms of processing speed, AI systems such as GPT-4 and AlphaZero can execute tasks in microseconds to milliseconds, operating up to six to nine orders of magnitude faster than the human brain. In terms of volume, cloud-deployed AI can manage hundreds of thousands of parallel queries per second, whereas human throughput is limited to only a few cognitive tasks at once. Regarding memory, modern transformer models trained on hundreds of billions of tokens exhibit near-perfect retention, with more exact data recall than the human brain. In problem-solving domains such as strategic games, mathematics, and medical diagnostics, AI systems like AlphaZero, GPT-4, and xAI’s Grok 3 have achieved superhuman performance, solving problems with an advantage of two to six orders of magnitude, particularly in environments requiring speed, logic, and pattern recognition. Most recently, Grok 4 and Grok 4 Heavy have surfaced, demonstrating intelligence levels that surpass those of most graduate students across multiple disciplines simultaneously, a notion that, just a year ago, would have been considered utterly implausible.

Screens That Think

As artificial intelligence (AI) becomes increasingly embedded in consumer technologies, one of the most compelling domains for its transformative potential is high-definition television (HDTV). Once a passive entertainment medium, HDTV is evolving into an intelligent, adaptive, and immersive interface. This article explores the future of HDTV through the lens of AI integration, examining how advancements in computer vision, natural language processing, content recommendation, autonomous calibration, and real-time environment sensing will redefine the viewing experience. It also discusses the architectural and signal management shifts needed to accommodate AI-driven operations, including edge computing, advanced HDMI/DisplayPort telemetry, and sensor-based interfaces. Finally, it outlines the challenges facing the workplace in a post-AI environment.

High-definition television has undergone several paradigm shifts since its commercial emergence in the early 2000s, from 720p and 1080p resolutions to the widespread adoption of 4K and now 8K displays. These leaps have largely focused on visual fidelity and data bandwidth. However, as AI technologies mature and become more pervasive, a new horizon is unfolding, one in which the television not only renders pixels but understands context, anticipates viewer behavior, and optimizes itself autonomously. This transition is fueled by developments in machine learning algorithms, real-time signal processing, embedded systems, and multi-modal user interfaces. The HDTV of the future may function less as a screen and more as an intelligent multimedia node capable of reasoning, adapting, and participating in its user’s cognitive ecosystem.

When Television Starts Thinking for Itself

AI’s role in consumer electronics began modestly, often limited to basic voice assistants or content recommendations. However, the integration of deep learning and neural networks into silicon via AI accelerators and their inference toolchains (such as Apple’s Neural Engine or NVIDIA’s TensorRT) has significantly expanded local inferencing capabilities. In modern smart televisions, AI now has the potential to handle tasks such as facial recognition for user profiles, real-time upscaling (e.g., Samsung’s AI Quantum Processor), and adaptive brightness, sharpness, contrast, focus, and sound optimization.

The next evolution could go even further, with AI becoming the decision-making nucleus of the HDTV and controlling every subsystem from signal decoding to environmental sensing and content delivery strategy. For instance, AI could dynamically alter decoding strategies based on perceived viewer attention or mood using integrated biometric sensors. A viewer returning home after a stressful workday may be presented with calming nature content without having to request it. The television will interpret facial expressions, vocal tones, and ambient household sounds to intelligently select appropriate content. Over time, it will learn the user’s behavioral baseline, enabling it to make strategic decisions and proactively schedule content, ultimately creating personalized rituals and programming blocks aligned with the viewer’s daily rhythms. Viewers may no longer browse; instead, they will be guided through an immersive, coherent content landscape shaped by AI into a virtual concierge experience. HDMI and DisplayPort protocols could evolve to include bidirectional AI telemetry, sending not just video data but also AI states, performance logs, and even prediction results between source and sink devices.

Television That Thinks: The End of Passive Viewing

AI-controlled HDTV could excel in five foundational areas: autonomous calibration, ambient awareness, adaptive content delivery, personalized interactivity, and predictive maintenance.

Autonomous Calibration uses computer vision algorithms to adjust parameters such as contrast, color temperature, brightness, and sharpness based on ambient light, wall color, and even screen reflections. This is a significant advantage, because the picture stays optimized no matter what the environment is or how it changes over time. These calibrations, driven by reinforcement learning, could eliminate the need for manual adjustments entirely.
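
To make the ambient-light part of this idea concrete, the sketch below maps a light-sensor reading to a backlight level and eases toward it over time. It is a hand-written heuristic standing in for the learned policy described above, not a reinforcement learning implementation. The function names, the lux range, and the percentage limits are all illustrative assumptions.

```python
import math


def target_backlight(lux, min_pct=15.0, max_pct=100.0,
                     dark_lux=1.0, bright_lux=1000.0):
    """Map ambient illuminance (lux) to a backlight percentage.

    A logarithmic scale is used because perceived brightness grows
    roughly with the logarithm of illuminance. All limits are assumed
    values chosen for illustration.
    """
    lux = min(max(lux, dark_lux), bright_lux)  # clamp to the supported range
    fraction = math.log10(lux / dark_lux) / math.log10(bright_lux / dark_lux)
    return min_pct + fraction * (max_pct - min_pct)


def smooth(previous_pct, target_pct, alpha=0.2):
    """Move a fraction of the way toward the target to avoid visible jumps."""
    return previous_pct + alpha * (target_pct - previous_pct)


if __name__ == "__main__":
    level = 50.0
    for reading in (300.0, 300.0, 20.0, 20.0, 20.0):  # lights dim mid-sequence
        level = smooth(level, target_backlight(reading))
        print(f"lux={reading:6.1f} -> backlight {level:5.1f}%")
```

A learned controller would replace `target_backlight` with a policy tuned from viewer feedback, but the smoothing step would likely remain so that adjustments are not distracting.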

Ambient Awareness combines microphones, IR sensors, and cameras to gauge viewing conditions and user presence. Using edge-deployed computer vision models, the television may detect how many people are watching, what their distance from the screen is, or whether they’re even engaged. This data can inform decisions like pausing playback when the viewer looks away or enlarging subtitles for distant viewers.
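
The two decisions mentioned above, enlarging subtitles for distant viewers and pausing when nobody is watching, can be sketched as simple rules applied to the output of a vision model. The `ViewerObservation` type, the 2.5 m reference distance, and the three-frame pause rule are hypothetical choices made for this example; a real television would obtain these observations from its own on-device model.

```python
from dataclasses import dataclass


@dataclass
class ViewerObservation:
    """One viewer as reported by a hypothetical on-device vision model."""
    distance_m: float        # estimated distance from the screen in meters
    looking_at_screen: bool  # whether the viewer appears to be watching


def subtitle_scale(viewers, reference_m=2.5, max_scale=2.0):
    """Scale subtitles for the farthest viewer, clamped to [1.0, max_scale]."""
    if not viewers:
        return 1.0
    farthest = max(v.distance_m for v in viewers)
    return min(max_scale, max(1.0, farthest / reference_m))


def should_pause(history, min_frames=3):
    """Pause only if nobody watched during the last min_frames observations.

    history is a list of frames, each frame being a list of
    ViewerObservation. Requiring several consecutive frames avoids
    pausing on a brief glance away.
    """
    if len(history) < min_frames:
        return False
    recent = history[-min_frames:]
    return all(not any(v.looking_at_screen for v in frame) for frame in recent)
```

For example, with one viewer at 2 m and another at 5 m, `subtitle_scale` returns 2.0, so the subtitles are doubled for the more distant viewer.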

Adaptive Content Delivery hinges on deep learning models trained on metadata, social context, and historical preferences. Rather than using static recommendation engines, AI HDTV could sequence content across platforms using transformer-based models similar to GPT or BERT, enabling thematic continuity, mood-based suggestions, or even family-wide content orchestration.

Personalized Interactivity includes real-time natural language interfaces, computer vision for gesture controls, and adaptive accessibility features. For example, AI could tailor audio output to the auditory profile of each viewer, including frequency response, time coordination, and dispersion, with obvious benefits for hearing-impaired users. In educational or commercial settings, the television may identify the speaker and provide real-time transcriptions or summaries on screen.

Predictive Maintenance applies anomaly detection algorithms to internal diagnostics, monitoring temperature, voltage rails, signal noise, and memory usage to detect failing components or recommend firmware updates preemptively. Data may be cross-referenced anonymously with cloud-based AI to detect model-specific trends.
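
One simple form of anomaly detection on a diagnostic signal is a rolling z-score: each new reading is compared against the mean and spread of recent readings. The sketch below illustrates that idea for a single sensor such as panel temperature. The class name, window size, and threshold are assumptions for illustration, and a production system would likely use richer models across many signals.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flag readings that deviate strongly from a rolling baseline."""

    def __init__(self, window=30, threshold=3.0, min_samples=5):
        self.samples = deque(maxlen=window)   # recent normal readings
        self.threshold = threshold            # allowed deviation in std devs
        self.min_samples = max(2, min_samples)  # stdev needs two points

    def update(self, value):
        """Return True if value is anomalous relative to recent history."""
        anomalous = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.threshold
            else:
                anomalous = value != mu
        if not anomalous:
            # Keep outliers out of the baseline so one spike does not
            # mask the next one.
            self.samples.append(value)
        return anomalous


if __name__ == "__main__":
    detector = RollingAnomalyDetector()
    for temp_c in (50.0, 50.5, 49.5, 50.2, 49.8, 50.1, 70.0, 50.0):
        print(temp_c, detector.update(temp_c))
```

The same detector could be instantiated once per voltage rail or per noise measurement, with flagged readings reported upstream for the cross-device trend analysis described above.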

Embedding Intelligence into a Display Infrastructure

The future of AI-controlled HDTV may require a fundamental shift in architecture, including compute placement, signal telemetry, and interface design.

Most existing smart televisions rely on centralized system-on-chips (SoCs) for both media processing and application logic. In an AI-centric architecture, dedicated neural processing units (NPUs) could be embedded both in the television and with upstream sources such as media players, gaming consoles, or set-top boxes. HDMI 2.1 and beyond could serve not just as video pipelines but as control buses for AI command and feedback data.

To accommodate the bandwidth and latency needs of AI telemetry, new standards similar to HDMI’s Fixed Rate Link (FRL) could be adapted to include low-latency sidebands specifically for AI state exchanges. Telemetry data such as facial detection results, audio localization maps, or network congestion stats could travel alongside the video stream for synchronized decision-making.
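
As a rough illustration of what such a sideband message might carry, the sketch below packs a few telemetry fields into a compact binary record tagged with the video frame it belongs to. The field set and byte layout are entirely hypothetical; nothing like this is defined in any current HDMI or DisplayPort specification.

```python
import struct
from dataclasses import dataclass

# Hypothetical little-endian wire layout (an assumption for this sketch):
#   frame id (uint32), viewer count (uint8), attention score (uint8, 0-255),
#   sound azimuth in degrees (int16), link congestion in percent (uint8)
_LAYOUT = struct.Struct("<IBBhB")


@dataclass(frozen=True)
class AiTelemetry:
    """One telemetry record aligned to a specific video frame."""
    frame_id: int
    viewer_count: int
    attention: int
    sound_azimuth_deg: int
    congestion_pct: int

    def pack(self) -> bytes:
        """Serialize the record into the fixed 9-byte layout."""
        return _LAYOUT.pack(self.frame_id, self.viewer_count, self.attention,
                            self.sound_azimuth_deg, self.congestion_pct)

    @classmethod
    def unpack(cls, payload: bytes) -> "AiTelemetry":
        """Rebuild a record from bytes produced by pack()."""
        return cls(*_LAYOUT.unpack(payload))
```

Tagging each record with a frame identifier is what would let a source device line up a detection result with the exact frame that was on screen, which is the synchronization the paragraph above calls for.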

Moreover, DisplayPort 2.1’s support for tunneling and Multi-Stream Transport (MST) offers an ideal structure for layered data delivery, such as rendering video, transmitting AI directives, and returning sensory data, all in a unified cable interface.

The AI stack is likely to adopt a hybrid architecture, with real-time perceptual models such as audio and video analysis running on the device, while more complex inference refinement and model retraining occur in the cloud. Control policies are then delivered to the device from AI lifecycle managers (ALMs), ensuring adaptive and coordinated behavior. Edge AI platforms such as Google’s Edge TPU and Intel’s OpenVINO can serve as the foundation for localized inferencing directly within the HDTV.

Machine Learning Meets a Digital Interface

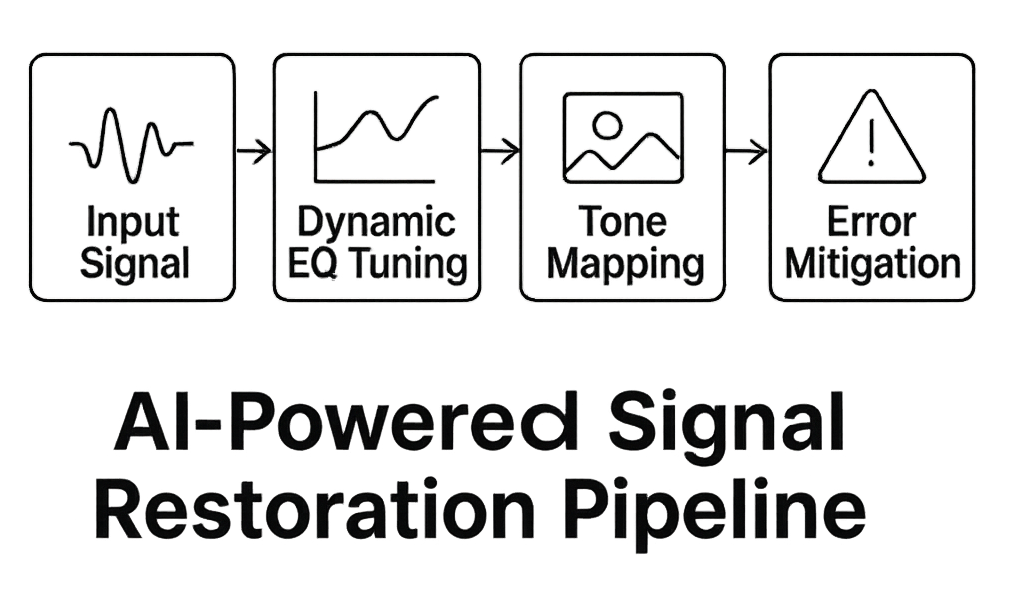

One of the less discussed but vital roles of AI in HDTV is real-time signal adaptation. HDMI and DisplayPort have historically relied on fixed EQ curves and Forward Error Correction (FEC) at the PHY layer to correct for transmission line losses. As resolutions increase (especially with 8K and beyond), maintaining signal integrity becomes more difficult.

AI models can be trained to perform adaptive equalization for links such as HDMI 2.1 by learning channel characteristics on the fly. Instead of using fixed pre-emphasis and de-emphasis curves, the television’s receiver may actively monitor eye diagrams via machine learning and adjust signal restoration parameters for optimum performance, while also drawing on historical data models.
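
The core idea of learning a channel on the fly can be illustrated with the classic least-mean-squares (LMS) adaptive filter, shown below on a toy channel that adds an echo of the previous symbol. This is a textbook technique chosen for illustration, not the mechanism any HDMI receiver is known to use; real receivers equalize multi-gigabit signals in dedicated hardware. The channel model, tap count, and step size are assumptions.

```python
import random


def simulate_channel(symbols, echo=0.5):
    """Toy lossy channel: each output is the symbol plus an echo of the last."""
    previous = 0.0
    received = []
    for symbol in symbols:
        received.append(symbol + echo * previous)
        previous = symbol
    return received


def lms_equalize(received, training, num_taps=5, step=0.02):
    """Train a FIR equalizer with the LMS rule.

    Returns the learned taps and the squared error at each sample, which
    should shrink as the filter learns to undo the channel.
    """
    taps = [0.0] * num_taps
    window = [0.0] * num_taps  # most recent received samples, newest first
    squared_errors = []
    for sample, desired in zip(received, training):
        window = [sample] + window[:-1]
        output = sum(w * x for w, x in zip(taps, window))
        error = desired - output
        # Nudge each tap in the direction that reduces the error.
        taps = [w + step * error * x for w, x in zip(taps, window)]
        squared_errors.append(error * error)
    return taps, squared_errors


if __name__ == "__main__":
    rng = random.Random(0)
    symbols = [rng.choice((-1.0, 1.0)) for _ in range(3000)]
    taps, errors = lms_equalize(simulate_channel(symbols), symbols)
    print("first taps:", [round(t, 2) for t in taps[:2]])
    print("mean squared error, last 200 samples:", sum(errors[-200:]) / 200)
```

In this toy setup the first two taps settle near 1 and -0.5, which approximates the inverse of the echo. A machine learning receiver as envisioned above would go further by choosing parameters from eye-diagram measurements and historical data rather than from a known training sequence.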

This extends to color correction and HDR tone mapping. AI-enhanced dynamic tone mapping interprets metadata in real time and reconstructs frames in a perceptually optimal way, potentially outperforming static HDR10 approaches.
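
The difference between a fixed curve and a per-frame one can be shown with a small sketch. The function below leaves a frame untouched when it already fits the display and otherwise compresses it with an extended Reinhard curve anchored to that frame's own peak. The Reinhard operator is a well-known tone-mapping curve used here as a stand-in; the 500-nit display peak and the function name are assumptions, and no AI model is involved.

```python
def tone_map_frame(frame_nits, display_peak=500.0):
    """Map one frame's pixel luminances (in nits) into the display's range.

    frame_nits must be a non-empty sequence. Frames that already fit are
    returned unchanged, so dim scenes are not compressed needlessly.
    """
    frame_peak = max(frame_nits)
    if frame_peak <= display_peak:
        return list(frame_nits)
    white = frame_peak / display_peak  # frame peak relative to the display
    mapped = []
    for nits in frame_nits:
        level = nits / display_peak
        # Extended Reinhard: near-linear for dark pixels, and the frame
        # peak lands exactly on the display peak.
        compressed = level * (1.0 + level / (white * white)) / (1.0 + level)
        mapped.append(display_peak * compressed)
    return mapped


if __name__ == "__main__":
    print(tone_map_frame([10.0, 100.0, 400.0]))     # fits: returned as-is
    print(tone_map_frame([100.0, 1000.0, 2000.0]))  # compressed to <= 500
```

A curve fixed for the whole title would apply the same compression to both frames. Choosing the curve per frame is what preserves detail in the dim scene, and a learned model could refine that choice further using scene content.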

Privacy and Ethical Concerns

AI-controlled televisions, by necessity, incorporate microphones, cameras, and extensive telemetry to enable most of their advanced features. This raises significant ethical and regulatory concerns. To mitigate these concerns, future HDTV may need hardware switches to disable sensors, on-device AI that performs all recognition tasks locally (edge AI), and transparent data usage policies. Federated learning may emerge as a core strategy, allowing models to learn across multiple devices without transferring raw data to the cloud.
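
The aggregation step at the heart of federated learning is commonly done with federated averaging, in which a server combines model weights from many devices, weighted by how much data each device trained on. The sketch below shows only that step, with model weights represented as plain lists of floats; the function name and data shapes are assumptions for illustration.

```python
def federated_average(client_updates):
    """Combine per-device model weights without seeing any raw data.

    client_updates is a list of (weights, num_examples) pairs, where
    weights is a list of floats of the same length for every device.
    """
    if not client_updates:
        raise ValueError("no client updates provided")
    total_examples = sum(count for _, count in client_updates)
    if total_examples == 0:
        raise ValueError("no training examples reported")
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, count in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must report the same model shape")
        for index, weight in enumerate(weights):
            # Devices with more local data contribute proportionally more.
            averaged[index] += weight * count / total_examples
    return averaged


if __name__ == "__main__":
    living_room_tv = ([1.0, 2.0], 1)  # trained on 1 local example
    bedroom_tv = ([3.0, 4.0], 3)      # trained on 3 local examples
    print(federated_average([living_room_tv, bedroom_tv]))
```

Only the weight vectors and example counts leave each television in this scheme. The camera frames and audio used for local training stay on the device.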

Regulatory frameworks like the EU’s AI Act and the California Consumer Privacy Act (CCPA) will likely shape how these televisions are marketed and sold. Manufacturers may be required to offer a “privacy mode” that disables AI features or anonymizes all data.

Additionally, bias in content recommendation systems will need oversight. If AI curates content unevenly across demographic lines, it may reinforce stereotypes or introduce echo chambers. The industry will need to define guidelines for ethical content personalization.

AI Television in Everyday Life

AI-controlled HDTVs will be instrumental in reshaping not just entertainment but also education, healthcare, and commerce.

In education, AI can curate educational videos based on real-time comprehension feedback, pausing or rephrasing based on facial expressions or eye tracking. Teachers can annotate content in real-time using their own gestures.

In healthcare, AI televisions can be used in elder care, reminding patients of medication schedules, detecting abnormal behavior (e.g., falls or wandering), and offering telemedicine services through natural language interfaces.

Retail applications will include virtual try-ons, AI-driven product showcases, and context-aware advertisements based on inferred household demographics or time of day.

In gaming, AI televisions can reduce input lag dynamically, enhance rendering pipelines through AI upscaling, and even coach users in competitive environments by analyzing gameplay.

When AI Listens: The Reinvention of High-End Audio

With USB-C increasingly positioned as the universal interface for data, power, and media, it opens the door to the seamless integration of high-end audio devices into intelligent, AI-enabled ecosystems. No longer limited to one-way analog paths, digital-to-analog conversion, or basic audio streaming, USB-C’s robust bidirectional bandwidth capabilities, telemetry, and high-capacity power delivery establish a real-time communication pipeline between source and sink devices. This facilitates the sophisticated coordination and feedback essential for advanced AI-driven audio processing and dynamic system optimization.

This evolution lays the groundwork for a radical leap in audio reproduction, where Artificial Intelligence (AI) reshapes the way sound is captured, processed, and rendered. At the heart of this shift is environmental sensing, the ability of an audio system to continuously analyze its surroundings in real time. Microphones, accelerometers, and even ultrasonic sensors embedded within speakers, headphones, or pre-amps and power amps can all provide a continuous stream of data. AI could then interpret this information to build an acoustic model of the room: identifying dimensions, surface materials, furniture placement, listener position, and fluctuating ambient noise.

Armed with this environmental intelligence, AI-driven audio systems can execute adaptive equalization that adjusts frequency response to compensate for room coloration and phase distortion. Time-domain processing algorithms ensure that sounds from multi-driver or multi-speaker setups arrive at the listener’s ears with precise alignment, correcting for physical distance, depth perception, reflection paths, and delay mismatches. The result is an immersive sound field where the perception of space and distance is rendered with extraordinary realism.
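
The distance part of this time alignment reduces to simple arithmetic: sound from a nearer speaker arrives earlier, so it is delayed by the extra travel time of the farthest one, that is, delay = (d_max - d) / c. The sketch below applies that formula. The speaker names and distances are made-up inputs, and 343 m/s is the approximate speed of sound in air at room temperature.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate, dry air near 20 degrees Celsius


def alignment_delays_ms(distances_m):
    """Return the delay in milliseconds to apply to each speaker.

    distances_m maps a speaker name to its distance from the listener in
    meters. The farthest speaker gets zero delay; nearer speakers are
    delayed so that all wavefronts arrive together.
    """
    farthest = max(distances_m.values())
    return {
        name: (farthest - distance) / SPEED_OF_SOUND_M_S * 1000.0
        for name, distance in distances_m.items()
    }


if __name__ == "__main__":
    layout = {"left": 3.43, "center": 3.43, "right": 2.744}
    for name, delay in alignment_delays_ms(layout).items():
        print(f"{name}: {delay:.2f} ms")
```

Here the right speaker is 0.686 m closer than the others, so it is delayed by about 2 ms. An AI-driven system as described above would estimate these distances itself from sensor data and would also account for reflections, which this sketch ignores.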

But the possibilities don’t end with spatial correction. AI systems could also enhance source material dynamically, performing real-time psychoacoustic optimization. That means understanding the content, whether it’s a live jazz recording, a cinematic explosion, or a whisper in a podcast, while reshaping the sound profile to preserve nuance, clarity, and presence across any environment. USB-C’s support for high-resolution audio ensures that the fidelity of this processing remains uncompromised, and its standardized connector format makes this experience portable and consistent across devices.

The brain does not interpret sound by frequency and amplitude alone. By tailoring the audio output to match the environmental conditions and the psychological expectations of the listener, AI begins to emulate how we perceive sound in the real world. It doesn’t just play back audio; it constructs an acoustic event, one that feels alive, dynamic, and indistinguishable from being physically present at the original performance.

It’s not just better sound. It’s a step closer to auditory reality.

Workforce Challenges and Opportunities

A growing and pressing concern within the consumer electronics sales and integration sector is the accelerating erosion of the integrator’s role, fueled by the rise of AI-powered automation, self-configuring technologies, and predictive diagnostics. These advancements increasingly threaten to bypass traditional integration and support channels entirely.

AI-enabled televisions, home automation hubs, and AV receivers are beginning to auto-detect connected devices, optimize video and audio settings in real time, and even self-diagnose signal or network issues, which could bypass the need for hands-on expertise. Cloud-based control systems with AI-assisted configuration can allow end users to set up complex installations with minimal professional involvement. This trend threatens to commoditize the integrator’s value proposition, especially for residential and small commercial projects where convenience and simplicity now outweigh custom engineering.

Another pressure point is remote support powered by AI. Virtual assistants and machine learning models embedded in CE platforms can often resolve user issues through chat or voice interfaces without technician intervention. Furthermore, large integrator networks or manufacturers can use centralized AI tools to remotely monitor performance across thousands of deployed systems, limiting the need for local, independent integrators. The core concern is that as AI reduces friction in installation and support, the demand for traditional integrator services may decline, shifting revenue toward software-based platforms, subscription services, and manufacturer ecosystems. For integrators to remain viable, they will need to evolve and offer higher-tier services such as network engineering, cybersecurity for smart homes, or custom AI/IoT integrations that go beyond plug-and-play.

This also suggests that future workforce selection will undergo significant changes. A company’s hiring practices will likely shift toward candidates with experience or familiarity in some form of AI. As one source noted, “the consumer electronics industry may need to focus on engaging students still in high school to cultivate the talent that will ultimately be essential in an AI-driven future.”

Ultimately, AI doesn’t eliminate the need for integrators, but it will transform them. The concern is that those who do not adapt to this transformation could be left behind.

The Bottom Line

The fusion of artificial intelligence with high-definition television is not a distant vision; it is an inevitable transformation that redefines how screens integrate into daily life. From autonomous calibration to predictive diagnostics, from adaptive user engagement to ethical content filtering, AI-controlled HDTV promises an intelligent, participatory media experience. The shift requires both hardware and protocol evolution, such as the embedding of NPUs, the extension of HDMI and DisplayPort to handle AI telemetry, and the deployment of hybrid edge-cloud inferencing stacks. Despite the privacy and ethical challenges, the trajectory points to a screen that doesn’t just display but thinks, learns, and enhances life contextually.

As AI models advance in both complexity and miniaturization, the television will evolve from a passive peripheral into an active assistant. Spaces like the living room, classroom, hospital, and retail floor could be transformed by adopting forms and functions previously unimaginable.